Server Build 2.0 Part 3 – Proxmox and Docker

Previously, I talked about setting up the network in my new homelab. In this article I’ll briefly cover Proxmox and how I have it set up, but mostly talk about Docker and some of the fun and neat things I do with it.

My server is an HP ProDesk 600 G5 SFF computer that I found for a great price at a local computer repair/recycling shop. It came with no hard drives and no RAM. It did come with a 9th gen i7, though. I maxed it out at 128 gigs of RAM. I added 2 x 4 TB SSDs in a RAIDZ. It also has a cheap 256 GB NVMe drive for Proxmox itself. What does all of that mean? Well, it means that I have enough memory to run a bunch of things at once. The 4 TB SSDs hold redundant copies of the data, so if one fails, my data isn’t lost. The filesystem is ZFS, which is “self-healing”: when it finds corrupted data, it can repair it from the redundant copy.

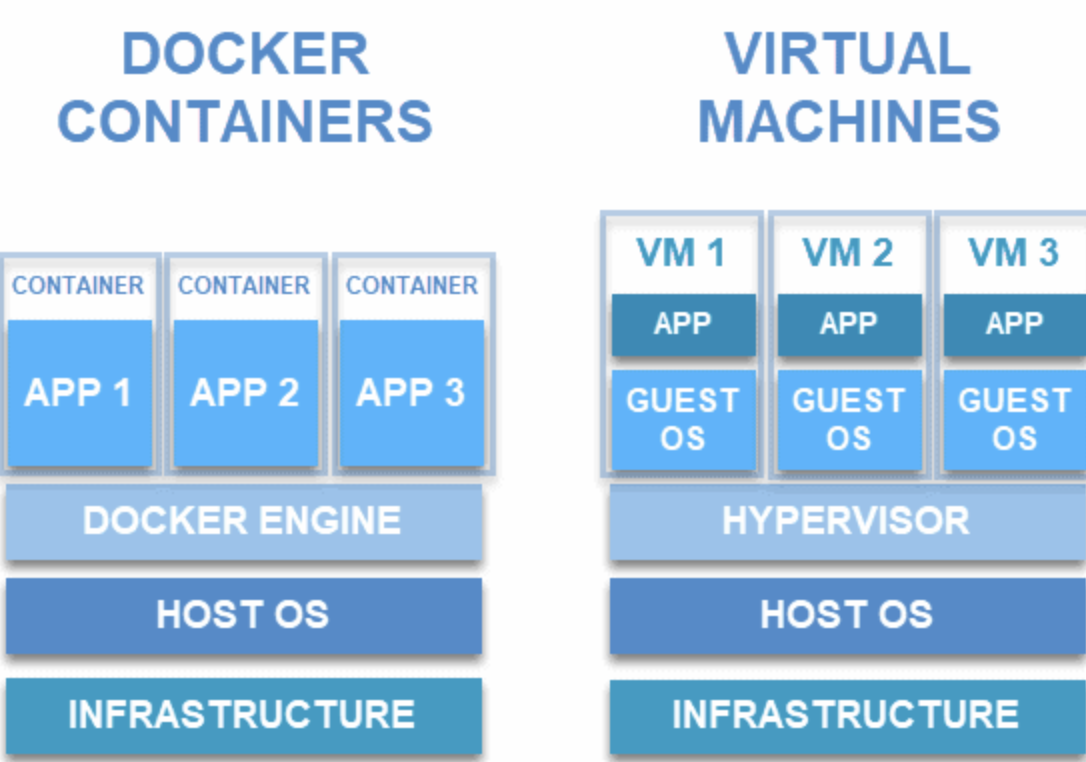

Proxmox is essentially the same thing as Xen from my first server: both are hypervisors. A hypervisor is a very bare-bones operating system whose primary job is to run virtual machines (VMs). This enables you to have multiple systems running at the same time, on the same computer. I don’t have a problem with Xen. It was reliable. It did what I wanted. My laptop is running QubesOS, which is based on Xen. But the free community edition I had been using was discontinued, and Proxmox became the go-to hypervisor for selfhosting.

Installing Proxmox is basically the same as installing any other operating system. I just had to keep track of which ethernet port was which. I assigned eth0 to VLAN1 (management) and eth1 to VLAN20 (everyday usage). Then in each VM, I can pick and choose which network it uses.

Docker is a platform for running “containerized” applications or services. A container is basically a software package that “contains” all of the files the application needs. You can easily move it from one computer to the next, and as long as that computer is running Docker, everything should be ready to go. You don’t have to worry about missing dependencies or OS-specific “quirks”. One important thing to note is that the container is kind of locked. Yes, you can make tweaks to it, but to keep this article very simple, think of it as preconfigured. You can then link local storage to the container. That way, if you update the container to a new version, you don’t lose your own files. I use this with NextCloud. Even if I deleted NextCloud, my files would still be there. This also enables me to link those same files to multiple containers. That’s how Immich can see the same photos that are on NextCloud. Here’s a fake directory structure as an example.

/home

/home/docker

/home/docker/nextcloud

/home/docker/immich

/home/photos

Then in Docker, each container links to the same photos directory. If I delete the NextCloud directory, the photos are untouched.
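To make the shared-folder idea a bit more concrete, here is a minimal Python sketch that builds the kind of `docker run` commands involved. It only prints the commands; it doesn’t start anything. The host paths come from the fake directory structure above, while the image names (`example/nextcloud`, `example/immich`) and the paths inside the containers (`/config`, `/photos`) are placeholders I made up for illustration, not the real settings for either app.

```python
import shlex

# Host path from the fake directory structure above.
PHOTOS_DIR = "/home/photos"


def docker_run_command(name, image, mounts):
    """Build a `docker run` command that bind-mounts host directories.

    `mounts` maps a path on the host to a path inside the container.
    """
    cmd = ["docker", "run", "-d", "--name", name]
    for host_path, container_path in mounts.items():
        # -v host_path:container_path is Docker's bind-mount syntax.
        cmd += ["-v", f"{host_path}:{container_path}"]
    cmd.append(image)
    return cmd


# Image names and container-side paths are hypothetical placeholders.
nextcloud = docker_run_command(
    "nextcloud",
    "example/nextcloud",
    {"/home/docker/nextcloud": "/config", PHOTOS_DIR: "/photos"},
)
immich = docker_run_command(
    "immich",
    "example/immich",
    {"/home/docker/immich": "/config", PHOTOS_DIR: "/photos"},
)

for cmd in (nextcloud, immich):
    print(shlex.join(cmd))
```

Both commands mount the same `/home/photos` directory, while each container keeps its own private folder under `/home/docker`. Removing either container (or its private folder) leaves the photos where they are.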

I currently have 4 VMs: a Windows VM for testing on VLAN20, a VM running Ubuntu Mate for NextCloud and Immich on VLAN1, a VM running Ubuntu Mate for the Arrstack on VLAN20, and a VM running Ubuntu Mate for everything else on VLAN1. (I love Ubuntu Mate.) This last one is the “main” Docker hub. Each VM runs Docker and Portainer. Portainer is management software for Docker. It’s got a pretty GUI, so you’re not stuck doing everything from the CLI. All but the main hub run Portainer Agents, which funnel all the information to the main Portainer. Separately, I run Docker and a Portainer Agent on my Synology NAS. Also, a quick shout out to Dr. Frankenstein’s amazing tutorials, which really started me down this path.

Now, why did I create so many VMs to do basically the same thing? Well, two reasons. One, VLANs. I want the Arrstack on VLAN20, while almost everything else is on VLAN1. Two, I didn’t make the Main Hub VM big enough, and by the time I noticed, I had installed and configured way too many things, so I decided to make NextCloud its own separate thing. Then I learned about Immich, and since it’s accessing the same files as NextCloud, I put it there. Meanwhile, all of my media is on the NAS, so it’s running its own containers. Mainly Jellyfin, so it can stream files directly from the NAS, instead of being on a VM and THEN streaming from the NAS. The media files are mounted on the Arrstack VM through an entry in /etc/fstab, so the same rules apply. If I delete a container, the files are left alone.

What’s the difference between a container and a virtual machine? Well, a virtual machine has set resources. If you specify 8 gigs of RAM and 2 CPU cores, it is allotted 8 gigs of RAM and 2 CPU cores from your host system, whether it’s doing anything or not. With a container, not only are the resource needs much lower because you’re just running a single program, but the container also only uses what it needs at the moment. Containers share the host system’s kernel, while VMs are isolated from it. Thus, putting Docker INSIDE a VM gives you that isolation, albeit at a slight resource hit. Not enough for you to notice, though. Proxmox has the ability to run containers directly (as LXC containers), but for Docker the usual advice is to run it inside a VM because of the security implications.

With all of this running, my server averages about 70 gigs of RAM, maybe 30% CPU, and barely any storage.

Next, I wanted to keep a backup of things. Proxmox does have the ability to make automated backups, but I found that they quickly used up a ton of space. The issue is that it backs up the entire VM, and I don’t really need that. I can reinstall an OS in 15 minutes. Since everything is installed in the /home directory, that’s all I need to keep. There are a lot of options out there, but I remember using Duplicati in the past, and so I set that up. Each VM is backed up once a week to the NAS. It’s a smart backup, so it only backs up files if they have changed, to save space. And it deletes old backups, but keeps something like 4 weekly backups, 1 every month, 1 every year, etc. Then the NAS is set to back up everything to an external hard drive. In an ideal world, I’d have an offsite backup as well, but I don’t have the money for that right now.
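The “keep 4 weekly, 1 every month, 1 every year” idea is easier to see in code. This is a rough Python sketch of that kind of tiered retention, not Duplicati’s actual algorithm. The function name `backups_to_keep` and the default limits are my own assumptions for illustration.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, weekly=4, monthly=12, yearly=5):
    """Return the set of backup dates to keep under a tiered retention scheme.

    For each tier, keep the newest backup from each of the most recent
    `weekly` ISO weeks, `monthly` calendar months, and `yearly` calendar
    years that contain a backup. Everything else could be deleted.
    """
    tiers = [
        (weekly, lambda d: d.isocalendar()[:2]),  # (ISO year, ISO week)
        (monthly, lambda d: (d.year, d.month)),
        (yearly, lambda d: (d.year,)),
    ]
    newest_first = sorted(set(backup_dates), reverse=True)
    keep = set()
    for limit, bucket_of in tiers:
        seen = []
        for d in newest_first:
            bucket = bucket_of(d)
            if bucket in seen:
                continue  # a newer backup already represents this bucket
            if len(seen) == limit:
                break  # this tier already has all the buckets it may keep
            seen.append(bucket)
            keep.add(d)
    return keep


# Ten weekly backups, taken every Sunday starting 2024-01-07.
weekly_backups = [date(2024, 1, 7) + timedelta(weeks=i) for i in range(10)]
for kept in sorted(backups_to_keep(weekly_backups, weekly=4, monthly=3, yearly=1)):
    print(kept)
```

The newest backups are kept densely, and older ones thin out to one per month and then one per year, which is why the backup folder stops growing so quickly.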

So what can you do with this? Everything. Obviously, I’m running two websites. Migrating from WordPress on my old hosting package to the new one was pretty straightforward. There are plugins that do the heavy lifting, and the only thing I had to do was rebuild the thumbnails. I had considered switching to Ghost, but all of the image links failed, and I just didn’t want to put that much effort into it. I have my own speedtest server, from OpenSpeedTest. I use UptimeKuma to track each service and let me know if anything goes down. Homarr is a sort of “homepage” that shows all of the services and allows me to click on them to open them, instead of having to remember IP addresses and ports. Jellyfin allows me to stream media. The Arrstack “purchases” that media. NextCloud hosts files that I might need away from home. I really would have liked to have put that on my NAS, but it required too many resources. Immich is a photo manager that includes locally run identification. So I can search for “car” and it will show any photos I have of cars. It’s not perfect, but it is really close. It also allowed me to clear out duplicates, saving over 20 gigs of files. Kiwix allows you to host your own copy of Wikipedia (and other databases like that). The databases are updated periodically, but are often HUGE files. Still, when I sail away, it will be nice to have a full backup of Wikipedia. I have WatchTower installed on each separate VM, which is a Docker container for automatically updating other Docker containers.
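As a rough idea of what “tracking each service” means at its simplest, here is a small Python sketch that checks whether something is answering on a given address and port. This is not how UptimeKuma is implemented (it does far more, like HTTP checks and notifications). The function names are my own, and the demo uses a throwaway listener on the local machine as a stand-in for a real service.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_services(services, timeout=2.0):
    """Map each service name to True (up) or False (down).

    `services` maps a name to a (host, port) pair.
    """
    return {
        name: is_port_open(host, port, timeout)
        for name, (host, port) in services.items()
    }


if __name__ == "__main__":
    # Stand-in for a real service: a temporary listener on a free local port.
    with socket.socket() as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen()
        demo_port = listener.getsockname()[1]
        print(check_services({"demo": ("127.0.0.1", demo_port)}))
```

In practice you would replace the demo entry with the addresses and ports of your own services and run the check on a schedule.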

1337 words so far.

I had considered running my own email service. It is possible, but there are a lot of things to consider, and I don’t trust my skills yet. Email is one of those things that you need to work constantly, and I don’t want to deal with it going down or something. If you ever meet someone who selfhosts their email, buy them a drink, because they deserve it.

At this point, I have just about everything I think I’d need. That said, there are so many clever people out there, and who knows where this technology will go. Some people have multiple servers, which allows “high availability”. That means if one server has to go down for a reboot, everything is moved over to another server first, so there’s no downtime. As much as I’d love to learn how to do that, I don’t have the money. Still, I’m so amazed at what is possible. I have an unused 3rd ethernet port on my server, so maybe I can use that for a Network Intrusion Detection System like Security Onion, which is available in Docker. And all of this software is free, though you can donate to most of the developers.

Hmm. Wazuh tracks everything, even when services run updates.